Backpropagation Programmer

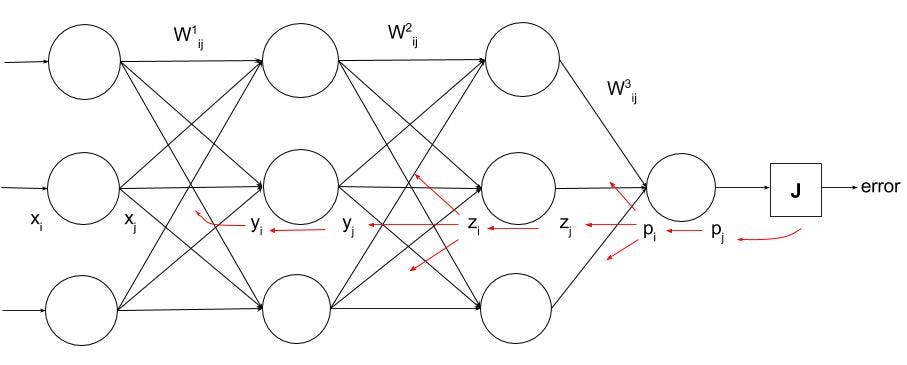

This paper presents an approach to learning polynomial feedforward neural networks (PFNNs). The approach first finds the polynomial network structure by means of a population-based search technique based on the genetic programming paradigm, and then further adjusts the weights of the best discovered network with a specially derived backpropagation algorithm for higher-order networks with polynomial activation functions. These two stages of the PFNN learning process make it possible to identify networks with good training as well as generalization performance. Empirical results on benchmark time series show that this approach finds PFNNs that considerably outperform some previous constructive polynomial network algorithms.
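The abstract does not give the node form or the derived backpropagation rules, so the following is only a minimal sketch of the second (weight-adjustment) stage under stated assumptions. It assumes each node computes a bivariate quadratic f(u, v) = w0 + w1·u + w2·v + w3·u·v + w4·u² + w5·v². It also uses a small, hypothetical, fixed tree: two hidden nodes feed one output node. The genetic-programming structure search is omitted. The function names, toy data, learning rate, and plain stochastic gradient descent are illustrative choices, not the paper's algorithm. The point it shows is that the error gradient reaches the hidden nodes through the derivative of the output node's polynomial with respect to its inputs.

```python
import random

# Assumed node form (not stated in the abstract): a bivariate quadratic
# f(u, v) = w0 + w1*u + w2*v + w3*u*v + w4*u^2 + w5*v^2.
def features(u, v):
    return [1.0, u, v, u * v, u * u, v * v]


def node(w, u, v):
    return sum(wi * fi for wi, fi in zip(w, features(u, v)))


def d_inputs(w, u, v):
    # Partial derivatives of the node output with respect to u and v.
    return (w[1] + w[3] * v + 2 * w[4] * u,
            w[2] + w[3] * u + 2 * w[5] * v)


def predict(ws, x):
    # Hypothetical fixed tree: two hidden nodes feed one output node.
    h1 = node(ws[0], x[0], x[1])
    h2 = node(ws[1], x[1], x[2])
    return node(ws[2], h1, h2)


def train_step(ws, x, t, lr):
    # Forward pass, keeping the hidden outputs for the backward pass.
    h1 = node(ws[0], x[0], x[1])
    h2 = node(ws[1], x[1], x[2])
    y = node(ws[2], h1, h2)
    e = y - t  # dE/dy for E = 0.5 * (y - t)**2
    # Backward pass: chain rule through the output node's polynomial.
    d_h1, d_h2 = d_inputs(ws[2], h1, h2)
    grads = [
        [e * d_h1 * f for f in features(x[0], x[1])],
        [e * d_h2 * f for f in features(x[1], x[2])],
        [e * f for f in features(h1, h2)],
    ]
    # Gradient-descent update, applied only after all gradients are computed.
    for w, g in zip(ws, grads):
        for k in range(len(w)):
            w[k] -= lr * g[k]


def mse(ws, data):
    return sum((predict(ws, x) - t) ** 2 for x, t in data) / len(data)


# Toy data (hypothetical): t = 0.3*x0*x1 - 0.2*x2 + 0.1 on a grid.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [((a, b, c), 0.3 * a * b - 0.2 * c + 0.1)
        for a in grid for b in grid for c in grid]

rng = random.Random(1)
ws = [[rng.uniform(-0.1, 0.1) for _ in range(6)] for _ in range(3)]
before = mse(ws, data)
for _ in range(200):
    for x, t in data:
        train_step(ws, x, t, lr=0.02)
after = mse(ws, data)
print(f"MSE before: {before:.4f}, after: {after:.4f}")
```

The small learning rate and small initial weights are deliberate. Polynomial activations can grow quickly, so cautious steps help keep this toy example numerically stable.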


In the October 2012 issue of MSDN Magazine, I wrote an article titled “Neural Network Back-Propagation for Programmers”. You’ve probably heard the term neural network before. An artificial (software) neural network models biological neurons and synapses to create a system that can be used to make predictions, such as predicting football scores or stock market prices. The basic idea is to use a set of training data (with known inputs and outputs) to tune the neural network, that is, to find the set of numeric constants (called weights and biases) that best fit the training data. The neural network can then use those constants to make predictions for new inputs whose outputs are unknown.
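The idea of tuning weights and biases on known input/output pairs and then predicting unseen inputs can be sketched in a few lines of Python. This is a minimal illustration, not the code from the MSDN article. It assumes one hidden layer of tanh units, a single linear output, squared error, and plain stochastic gradient descent. The function names `train` and `mse`, the toy data, and the hyperparameters are all hypothetical.

```python
import math
import random


def train(data, n_hidden=4, lr=0.1, epochs=2000, seed=0):
    """Fit the weights and biases of a one-hidden-layer network
    (tanh hidden units, linear output) to (inputs, target) pairs using
    backpropagation and stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_ih = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b_h = [0.0] * n_hidden
    w_ho = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b_o = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(w_ih[j], x)) + b_h[j])
             for j in range(n_hidden)]
        y = sum(w * hj for w, hj in zip(w_ho, h)) + b_o
        return h, y

    for _ in range(epochs):
        for x, t in data:
            h, y = forward(x)
            d_out = y - t  # dE/dy for E = 0.5 * (y - t)**2
            # Backpropagate through tanh: d tanh(a)/da = 1 - tanh(a)**2.
            d_hid = [d_out * w_ho[j] * (1 - h[j] ** 2) for j in range(n_hidden)]
            for j in range(n_hidden):
                w_ho[j] -= lr * d_out * h[j]
                b_h[j] -= lr * d_hid[j]
                for i in range(n_in):
                    w_ih[j][i] -= lr * d_hid[j] * x[i]
            b_o -= lr * d_out

    # The returned predictor uses the tuned weights and biases.
    return lambda x: forward(x)[1]


def mse(predict, data):
    return sum((predict(x) - t) ** 2 for x, t in data) / len(data)


# Hypothetical training data with known outputs: t = 0.5*x1 - 0.3*x2 + 0.2.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [((a, b), 0.5 * a - 0.3 * b + 0.2) for a in grid for b in grid]

predict = train(data)
print(f"training MSE: {mse(predict, data):.5f}")
print(f"prediction for new input (0.25, -0.75): {predict((0.25, -0.75)):.3f}")
```

Plain Python lists keep the sketch dependency-free and make each weight update visible. A practical implementation would more likely use vectorized matrix operations from a numerical library.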